Vice Chairman Donald L. Kohn

At the Conference on John Taylor's Contributions to Monetary Theory and Policy, Federal Reserve Bank of Dallas, Dallas, Texas

October 12, 2007

John Taylor Rules

The Role of Simple Rules in Monetary Policymaking

It is a pleasure and an honor to speak at this conference honoring John Taylor and his contributions to monetary theory and policy. As you have already heard from Chairman Bernanke and the other speakers today, John has made a number of seminal contributions to the field of macroeconomics. What has distinguished John's work, in my view, is that he takes policymaking in the real world seriously.1

Taking policymaking seriously involves understanding the constraints imposed on our decisions by partial information and incomplete knowledge of economic relationships. It also implies the use of empirically valid models that acknowledge the efforts of households and businesses to anticipate the future and maximize their welfare over time. In the late 1980s and early 1990s, macroeconomics was focused mainly on real business cycles and endogenous growth theory. During this period, John was one of a very small number of academic economists who continued to pursue research aimed at informing the conduct of monetary policy. John's Carnegie Rochester conference paper published in 1993 is an excellent example of this research.

Importantly, John's legacy to the Federal Reserve has not been confined to enhancing our understanding of monetary policy. In addition, he has turned out legions of students who have followed in his footsteps in their interest in policy. Many of them have spent time in the Federal Reserve, producing a rich array of contributions to policymaking and research.

John and I have spent countless hours discussing how the Federal Reserve arrives at decisions about monetary policy and how it should arrive at decisions. Those conversations began in earnest in the late 1980s, when John was on the Council of Economic Advisers, and they have continued to the present day. They have occurred not only in offices and classrooms in Washington and Stanford and at numerous conferences around the globe, but also around dinner tables in Washington and Palo Alto and on hiking trails from Vermont to Wyoming. Those conversations made me a better policy adviser and then policymaker, and they have had the added and very special bonus of allowing Gail and me to count John and Allyn among our friends. I can't think of a better way to honor John's contributions than to continue that discussion around the dinner tables of Dallas by reflecting on the role of simple rules in informing policymaking.

Three Benefits of Simple Rules in Monetary Policymaking

In his Carnegie Rochester conference paper, John considered a simple policy rule under which the nominal federal funds rate is adjusted in response to both the gap between real and trend gross domestic product (GDP) and the gap between the inflation rate and policymakers' target. Based on data for the previous few years, John calibrated the long-run target for inflation and the two parameters that determine the responsiveness of the federal funds rate to the two gaps. The equilibrium real interest rate was based on a longer history of actual real interest rates. In the handout, Figure 1A depicts the actual nominal funds rate and the Taylor rule prescriptions between 1987 and 1992, as presented in John's paper. Despite its simplicity, this policy rule fits the data remarkably well; it described a period of generally successful policymaking; and it adhered to the Taylor principle of adjusting the nominal rate more than one-for-one with changes in the inflation rate, so it provided a plausible template for future success. It is no wonder that John has been such a dedicated salesman and that his efforts have been so well received in academia and policy councils.
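As a minimal sketch of the rule described above, the following Python function uses the parameter values from Taylor (1993): a 2 percent equilibrium real rate, a 2 percent inflation target, and a coefficient of 0.5 on each of the two gaps. The function and argument names are illustrative assumptions, not part of the original paper or of any staff implementation.

```python
def taylor_rule(inflation, output_gap, r_star=2.0, pi_target=2.0,
                a_pi=0.5, a_y=0.5):
    """Prescribed nominal federal funds rate, in percent.

    inflation  : inflation rate over the previous four quarters, percent
    output_gap : percent deviation of real GDP from its trend or potential
    r_star     : assumed equilibrium real interest rate, percent
    pi_target  : policymakers' inflation target, percent
    a_pi, a_y  : responsiveness to the inflation gap and the output gap
    """
    return r_star + inflation + a_pi * (inflation - pi_target) + a_y * output_gap


# Inflation at target and output at potential: 2 + 2 = 4 percent.
neutral = taylor_rule(inflation=2.0, output_gap=0.0)

# Inflation one point above target, output still at potential.
higher_inflation = taylor_rule(inflation=3.0, output_gap=0.0)

# The nominal rate rises by 1.5 points for a 1-point rise in inflation.
response = higher_inflation - neutral
```

Because inflation enters both directly and through the inflation gap, the total response to a one-point rise in inflation is 1 + 0.5 = 1.5 points, which is the more-than-one-for-one adjustment that the Taylor principle requires.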

Following John's seminal contribution, many other economists have engaged in research on similar policy rules and, together with John, have identified several benefits of such rules in conducting monetary policy. I will elaborate on three of them.

The first benefit of looking at a simple rule like John's is that it can provide a useful benchmark for policymakers. It relates policy setting systematically to the state of the economy in a way that, over time, will produce reasonably good outcomes on average. Importantly, the emphasis is on levels and gaps, not growth rates, as inputs to the policy process. This emphasis can be a problem when a level, say of potential GDP, is in question, but in many respects it is also a virtue. For the United States, the two gaps relate directly to the legislative mandate of the Federal Reserve to achieve stable prices and maximum employment. Moreover, those two gaps fit directly into most modern macroeconomic theories, which tell us something about their relationship and how that relationship can be affected by the type of shock hitting the economy.

Model uncertainties make the simplicity of the rule particularly important for the policymaker because research suggests that the prescriptions from simple rules can be more robust than optimal-control policies. Optimal-control policies can depend critically on the exact specification of the model, and clearly there is no consensus about which model best describes the U.S. economy.

Federal Reserve policymakers are shown several versions of Taylor rules in the material we receive before each meeting of the Federal Open Market Committee (FOMC). I always look at those charts and tables and ask myself whether I am comfortable with any significant deviation of my policy prescription from those of the rules.

A second benefit of simple rules is that they help financial market participants form a baseline for expectations regarding the future course of monetary policy. Even if the actual policy process is far more sophisticated than any simple rule could completely describe, the rule often provides a reasonably good approximation of what policymakers decide and a framework for thinking about policy actions. Indeed, many financial market participants have used the Taylor rule to understand U.S. monetary policy over the past fifteen years. Investors and other market participants are going to form expectations about policy and act on those expectations. The more accurate and informed those expectations are, the more likely are their actions to reinforce the intended effects of policy.

A third benefit is that simple rules can be helpful in the central bank's communication with the general public. Public understanding of how policy is set is important for the transmission mechanism of monetary policy. Giving the public some sense of how the central bank sees the output and inflation gaps and how they are expected to evolve will help it understand the central bank's objectives and how policymakers are likely to respond to surprises in incoming data.

Four Limitations of Simple Rules

Simple rules have limitations, of course, as benchmarks for monetary policy. To quote from John's Carnegie Rochester paper, "a policy rule can be implemented and operated more informally by policymakers who recognize the general instrument responses that underlie the policy rule, but who also recognize that operating the rule requires judgment and cannot be done by computer" (p. 198). In that context, four limitations of simple rules are important.

The first limitation is that the use of a Taylor rule requires that a single measure of inflation be used to obtain the rule prescriptions. The price index used by John in the Carnegie Rochester paper was the GDP price deflator. Other researchers have used the inflation measure based on the consumer price index (CPI). Over the past fifteen years, the Federal Reserve has emphasized the inflation rate as measured by changes in the price index for personal consumption expenditures (PCE). Many researchers have also explored the use of core price indexes, which exclude the volatile food and energy components, as better predictors of future inflation or as more robust indicators of the sticky prices that some theories say should be the targets of policy. To be sure, over long periods, most of these measures behave very similarly. But policy is made in the here and now, and the various indexes can diverge significantly for long stretches, potentially providing different signals for the appropriate course of monetary policy.

Second, the implementation of the Taylor rule and other related rules requires determining the level of the equilibrium real interest rate and the level of potential output; neither is an observable variable, and both must be inferred from other information. John used 2 percent as a rough guess as to the real federal funds rate that would be consistent with the economy producing at its potential. But the equilibrium level of the real federal funds rate probably varies over time because it depends on factors such as the growth rate of potential output, fiscal policy, and the willingness of savers to supply credit to households and businesses. Inaccurate estimates of this rate will mislead policymakers about the policy stance required to achieve full employment. In a similar vein, real-time estimates of potential output can be derived in a number of ways and--as shown by Orphanides (2003) and others--they are subject to large and persistent errors. If policymakers inadvertently rely on flawed estimates, they will encounter persistent problems in achieving their inflation objective.
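To make the point about the equilibrium real rate concrete, here is a small sketch under stated assumptions. It uses the Taylor (1993) coefficients of 0.5 on each gap, and the scenario in which the true equilibrium real rate is 1 percent rather than the assumed 2 percent is purely hypothetical, chosen only to show how the error passes through to the prescription.

```python
def taylor_rule(inflation, output_gap, r_star, pi_target=2.0,
                a_pi=0.5, a_y=0.5):
    """Prescribed nominal federal funds rate, in percent."""
    return r_star + inflation + a_pi * (inflation - pi_target) + a_y * output_gap


# Hypothetical scenario: inflation at target, output at potential.
# The policymaker assumes an equilibrium real rate of 2 percent ...
assumed = taylor_rule(inflation=2.0, output_gap=0.0, r_star=2.0)

# ... but suppose, for illustration only, that the true value is 1 percent.
actual = taylor_rule(inflation=2.0, output_gap=0.0, r_star=1.0)

# The mismeasurement carries over one-for-one into the prescription.
policy_error = assumed - actual
```

In this sketch the prescribed rate is a full percentage point too high for as long as the estimate is wrong, because the equilibrium real rate enters the rule as a constant term.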

The third limitation of using simple rules for monetary policymaking stems from the fact that, by their nature, simple rules involve only a small number of variables. However, the state of a complex economy like that of the United States cannot be fully captured by any small set of summary statistics. Moreover, policy is best made looking forward, that is, on the basis of projections of how inflation and economic activity may evolve. Lagged or current values of the small set of variables used in a given simple rule may not provide a sufficient guide to future economic developments, especially in periods of rapid or unusual change. For these reasons, central banks monitor a wide range of indicators in conducting monetary policy. In his Carnegie Rochester paper, John mentioned the stock market crash of October 1987 as an example of how other variables can and should influence the course of monetary policy in some situations.

The final limitation I want to highlight is that simple policy rules may not capture risk-management considerations. In some circumstances, the risks to the outlook or the perceived costs of missing an objective on a particular side may be sufficiently skewed that policymakers will choose to respond by adjusting policy in a way that would not be justified solely by the current state of the economy or the modal outlook for output and inflation gaps.

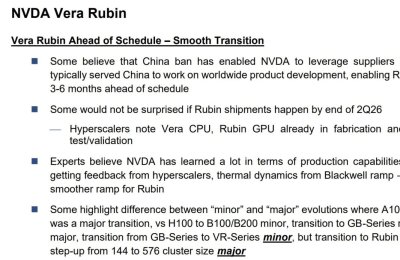

Policy Rules around 2003

Some of the ambiguities and potential pitfalls in the use of simple policy rules are highlighted by considering their prescriptions for a period earlier in this decade. Turning to Figure 1B, the solid line indicates the actual federal funds rate between the first quarter of 1993 and the second quarter of 2007, and the dashed line shows the prescriptions of the Taylor rule using the same methodology that John used in his Jackson Hole remarks this year.2 For the earlier part of the sample, the prescription from this simple rule tracks the actual funds rate relatively well. As John pointed out, a notable deviation happened beginning in 2002, and I would like to discuss that period to illustrate the limitations I noted earlier.

Inflation Measure

The first limitation is related to the measure used for the inflation variable included in the rules. The rule prescriptions depicted by the dashed line in Figure 1B are based on the headline CPI. But as you know, the FOMC often looks at core inflation, stripping out the effects of energy and food prices, as a better indicator of future price behavior. The dotted line represents the rule prescriptions based on the chain-weighted core CPI, which the Bureau of Labor Statistics has produced since 2000. Using this measure lowers the prescribed funds rate by about 2 percentage points during 2003, bringing the rule prescriptions much closer to the actual path of policy. The reason for the improvement is evident from Figure 2A, on the other side of the handout: Even though the headline and core CPI measures were broadly similar in the mid- to late 1990s, these measures diverged substantially between 2003 and 2005.

Potential Output

The second limitation relates to the challenge of judging the level of potential output in real time. To illustrate this point, Figure 2B plots three measures of the output gap. The solid line is the real-time estimate by the Congressional Budget Office (CBO) that was used in the Taylor rule prescriptions in Figure 1B, while the dashed line depicts the CBO's ex post estimate of the output gap as of the third quarter of 2007. Back in 2003, the CBO estimated that output at that time was below potential by only 1 percent. With the benefit of four more years of data, the CBO currently estimates that the output gap for the first half of 2003 was considerably wider--about 3 percent. In addition, the dotted line represents an alternative measure of resource utilization derived from the unemployment rate and an estimate of the natural rate of unemployment (NAIRU) taken from the Board staff's FRB/US model. In fact, the unemployment rate was rising through the middle of 2003, so the FOMC had every reason to believe that the output gap was widening at that time. Using this unemployment-based measure rather than the real-time CBO measure would reduce the prescriptions of simple policy rules by roughly 1/2 percentage point in early 2003.
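The effect of an output gap revision on a rule prescription can be illustrated with simple arithmetic. The sketch below assumes the coefficient of 0.5 on the output gap from Taylor (1993); the specification behind Figure 1B may differ, and the function name is hypothetical. The gap values of -1 percent and -3 percent are the CBO's real-time and ex post estimates cited above.

```python
A_Y = 0.5  # assumption: output gap coefficient from Taylor (1993)


def prescription_shift(gap_old, gap_new, a_y=A_Y):
    """Change in the prescribed funds rate, in percentage points,
    when the output gap estimate moves from gap_old to gap_new,
    holding inflation and all other inputs fixed."""
    return a_y * (gap_new - gap_old)


# Real-time CBO estimate for 2003: output about 1 percent below potential.
# Ex post CBO estimate as of 2007: about 3 percent below potential.
shift = prescription_shift(gap_old=-1.0, gap_new=-3.0)  # -1.0
```

Under this assumed coefficient, a gap that is 2 percentage points wider lowers the prescribed funds rate by 1 percentage point, regardless of the inflation reading.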

Other Variables

The third limitation in my list was that the small set of economic measures included in simple rules may not fully reflect the state of the economy. Around 2003, financial market conditions may not have been adequately summarized by the assumed 2 percent equilibrium federal funds rate. Accounting scandals caused economic agents to lose confidence in published financial statements and in bond ratings. The result was higher uncertainty about the financial health of firms, and credit spreads widened substantially. Figure 2C shows that risk spreads on corporate bonds were elevated in this period. Other things equal, such spreads would reduce the federal funds rate needed to achieve full employment, perhaps explaining a portion of the gap between the actual federal funds rate and the outcome from the policy rule during this period.

Risk Management

The last item on my list of limitations was that simple rules do not take account of risk-management considerations. As shown in Figure 2A, the core CPI inflation rate for 2003 was falling toward 1 percent. The real-time reading of the core PCE inflation rate (not shown) was on average even lower than the comparable CPI figure. Given these rates, the possibility of deflation could not be ruled out. We had carefully analyzed the Japanese experience of the early 1990s; our conclusion was that aggressively moving against the risk of deflation would pay dividends by reducing the odds of needing to deal with the zero bound on nominal interest rates should the economy be hit with another negative shock. This factor is not captured by simple policy rules.

A Final Note

I have offered this analysis in the spirit of so many of the discussions I have had with John. His framework has been enormously important to policymaking in the Federal Reserve, and it has yielded many benefits. Nevertheless, it's important to keep in mind that some significant practical limitations also are associated with the application of such rules in real time. In other words, it's not so simple to use simple rules!

References

Orphanides, Athanasios (2003). "The Quest for Prosperity without Inflation," Journal of Monetary Economics, vol. 50 (April), pp. 633-63.

Poole, William (2007). "Understanding the Fed," Federal Reserve Bank of St. Louis, Review, vol. 89 (January/February), pp. 3-14, http://research.stlouisfed.org/publications/review/past/2007.

Taylor, John B. (1993). "Discretion versus Policy Rules in Practice," Carnegie-Rochester Conference Series on Public Policy, vol. 39, pp. 195-214, http://econpapers.repec.org/article/eeecrcspp/default1993.htm.

_________ (2007). "Housing and Monetary Policy," speech delivered at "Housing, Housing Finance, and Monetary Policy," a symposium sponsored by the Federal Reserve Bank of Kansas City, held in Jackson Hole, Wyo., August 30-September 1, www.kansascityfed.org/publicat/sympos/2007/pdf/2007.09.04.Taylor.pdf.

Footnotes

1. I am sure my colleagues join me in honoring John. However, my thoughts on policy rules are my own and not necessarily those of my colleagues on the Federal Open Market Committee. Jinill Kim and Andrew Levin, of the Board's staff, contributed to the preparation of these remarks.

2. Following John, the rule specification and the data used for the prescriptions closely follow the implementation of the Taylor rule in Bill Poole's speech in August 2006 (Poole, 2007). The inflation measure used for this rule is the four-quarter average headline CPI inflation rate, with the benchmark value set to 2 percent. Through 2001, the gap between real GDP and its potential is the value measured in real time by the staff of the Board of Governors. Because subsequent staff estimates of the output gap are not yet publicly available, the rule prescriptions for the post-2001 period are computed with the real-time output gap as constructed by the Congressional Budget Office.
